In my last blog post there was a small benchmark of Scala, Perl and Ruby.

Many geeks on the lists pointed out the problems and gave suggestions for improvement. I really appreciate them.

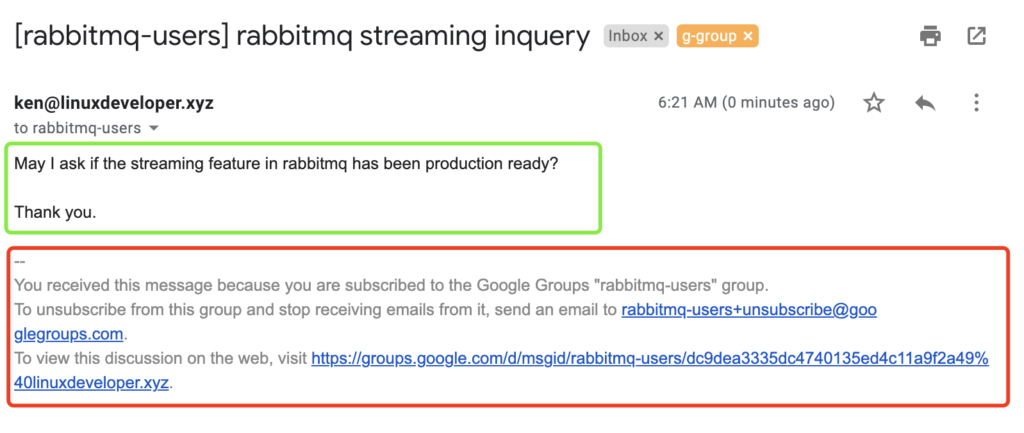

Why do I want to benchmark them? Because we have a real production system that uses a similar counting technique. In production, the input is a stream: every second, new words arrive at the service. The data comes from a message queue such as Kafka or RabbitMQ. We then run realtime computation on Spark, which reads the stream and runs DSL-style queries for filtering and counting.

Here is similar syntax on Spark:
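To make the filter-and-count step concrete without Spark, here is a minimal plain-Python sketch. It is only an illustration, not our production code: the names `word_stream` and `count_words` and the sample data are made up for this example, and a small list stands in for the message queue.

```python
from collections import Counter
from typing import Iterable, Iterator, Set


def word_stream(lines: Iterable[str]) -> Iterator[str]:
    """Yield one word per input line, with the trailing newline removed."""
    for line in lines:
        yield line.rstrip("\n")


def count_words(words: Iterable[str], stopwords: Set[str]) -> Counter:
    """Count every incoming word that is not a stopword."""
    counts = Counter()
    for word in words:
        if word not in stopwords:
            counts[word] += 1
    return counts


# Hypothetical sample input standing in for the message queue.
sample_lines = ["send\n", "the\n", "message\n", "send\n", "list\n", "the\n"]
sample_stopwords = {"the"}

counts = count_words(word_stream(sample_lines), sample_stopwords)

# Print the most frequent words, like the top-20 report in the benchmark.
for word, n in counts.most_common(2):
    print(f"{word} -> {n}")
```

Because the words are consumed through a generator, the same counting function works whether the input is a file, a list, or an endless stream.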

rdd=spark.readStream.format("socket")... # similar to words.txt

df=spark.createDataFrame(rdd.map(lambda x:(x,1)),["word","count"])

df2=df.filter(~col("word").isin(stopwords)) # filter out stopwords

df2.select("*").groupBy("word").count().orderBy("count",ascending=False).show(20)

Thanks to Paul, who pointed out that I can use mmap() to speed up file reads. I followed Paul's code and tested again, but strangely mmap() made no noticeable difference for me. Here is the comparison.

The common-read version for Perl:

use strict;

my %stopwords;

open HD,"stopwords.txt" or die $!;

while(<HD>) {

chomp;

$stopwords{$_} =1;

}

close HD;

my %count;

open HD,"words.txt" or die $!;

while(<HD>) {

chomp;

unless ( $stopwords{$_} ) {

$count{$_} ++;

}

}

close HD;

my $i=0;

for (sort {$count{$b} <=> $count{$a}} keys %count) {

if ($i < 20) {

print "$_ -> $count{$_}\n"

} else {

last;

}

$i ++;

}

The mmap version for Perl:

use strict;

my %stopwords;

open my $fh, '<:mmap', 'stopwords.txt' or die $!;

while(<$fh>) {

chomp;

$stopwords{$_} =1;

}

close $fh;

my %count;

open $fh, '<:mmap', 'words.txt' or die $!;

while(<$fh>) {

chomp;

unless ( $stopwords{$_} ) {

$count{$_} ++;

}

}

close $fh;

my $i=0;

for (sort {$count{$b} <=> $count{$a}} keys %count) {

if ($i < 20) {

print "$_ -> $count{$_}\n"

} else {

last;

}

$i ++;

}

The common-read version for Ruby:

stopwords = {}

File.open('stopwords.txt').each_line do |s|

s.chomp!

stopwords[s] = 1

end

count = Hash.new(0)

File.open('words.txt').each_line do |s|

s.chomp!

count[s] += 1 unless stopwords[s]

end

count.sort_by{|_,c| -c}.take(20).each do |s|

puts "#{s[0]} -> #{s[1]}"

end

The mmap version for Ruby:

require 'mmap'

stopwords = {}

mmap_s = Mmap.new('stopwords.txt')

mmap_s.advise(Mmap::MADV_SEQUENTIAL)

mmap_s.each_line do |s|

s.chomp!

stopwords[s] = 1

end

count = Hash.new(0)

mmap_c = Mmap.new('words.txt')

mmap_c.advise(Mmap::MADV_SEQUENTIAL)

mmap_c.each_line do |s|

s.chomp!

count[s] += 1 unless stopwords[s]

end

count.sort_by{|_,c| -c}.take(20).each do |s|

puts "#{s[0]} -> #{s[1]}"

end

The body of the Ruby code was optimized by Frank, thanks.

So, here is the comparison for Perl (the first run is the common version, the second is the mmap version):

$ time perl perl-hash.pl

send -> 20987

message -> 17516

unsubscribe -> 15541

2021 -> 15221

list -> 13017

mailing -> 12402

mail -> 11647

file -> 11133

flink -> 10114

email -> 9919

pm -> 9248

group -> 8865

problem -> 8853

code -> 8659

data -> 8657

2020 -> 8398

received -> 8246

google -> 7921

discussion -> 7920

jan -> 7893

real 0m2.018s

user 0m2.003s

sys 0m0.012s

$ time perl perl-mmap.pl

send -> 20987

message -> 17516

unsubscribe -> 15541

2021 -> 15221

list -> 13017

mailing -> 12402

mail -> 11647

file -> 11133

flink -> 10114

email -> 9919

pm -> 9248

group -> 8865

problem -> 8853

code -> 8659

data -> 8657

2020 -> 8398

received -> 8246

google -> 7921

discussion -> 7920

jan -> 7893

real 0m1.905s

user 0m1.888s

sys 0m0.016s

And here is the comparison for Ruby (the first run is the common version, the second is the mmap version):

$ time ruby ruby-hash.rb

send -> 20987

message -> 17516

unsubscribe -> 15541

2021 -> 15221

list -> 13017

mailing -> 12402

mail -> 11647

file -> 11133

flink -> 10114

email -> 9919

pm -> 9248

group -> 8865

problem -> 8853

code -> 8659

data -> 8657

2020 -> 8398

received -> 8246

google -> 7921

discussion -> 7920

jan -> 7893

real 0m2.690s

user 0m2.660s

sys 0m0.028s

$ time ruby ruby-mmap.rb

send -> 20987

message -> 17516

unsubscribe -> 15541

2021 -> 15221

list -> 13017

mailing -> 12402

mail -> 11647

file -> 11133

flink -> 10114

email -> 9919

pm -> 9248

group -> 8865

problem -> 8853

code -> 8659

data -> 8657

2020 -> 8398

received -> 8246

google -> 7921

discussion -> 7920

jan -> 7893

real 0m2.695s

user 0m2.689s

sys 0m0.004s

I ran the above comparison many times, and the results were similar. In my case there is no visible speed improvement from using mmap() to read the files.
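In the timings above, user time accounts for nearly all of the real time and sys time is close to zero, so one way to look further is to time the two read styles side by side in a single script. The following is a rough Python sketch of such a harness, not a port of the Perl or Ruby scripts: the function names and the small temporary input file are assumptions made for illustration, and the real words.txt would have to be substituted to get meaningful numbers.

```python
import mmap
import os
import tempfile
import time
from collections import Counter


def count_plain(path, stopwords):
    """Count words using ordinary buffered line reads."""
    counts = Counter()
    with open(path, "rb") as fh:
        for line in fh:
            word = line.rstrip(b"\n")
            if word not in stopwords:
                counts[word] += 1
    return counts


def count_mmap(path, stopwords):
    """Count words by reading lines from a memory-mapped file."""
    counts = Counter()
    with open(path, "rb") as fh:
        with mmap.mmap(fh.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            # readline() returns b"" at end of file, which stops the loop.
            for line in iter(mm.readline, b""):
                word = line.rstrip(b"\n")
                if word not in stopwords:
                    counts[word] += 1
    return counts


def timed(func, *args):
    """Run func(*args) and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - start


# Hypothetical small input; point sample_path at the real file to measure.
with tempfile.NamedTemporaryFile("wb", suffix=".txt", delete=False) as tmp:
    tmp.write(b"send\nthe\nmessage\nsend\n" * 1000)
    sample_path = tmp.name

stop = {b"the"}
plain_counts, plain_secs = timed(count_plain, sample_path, stop)
mmap_counts, mmap_secs = timed(count_mmap, sample_path, stop)
os.remove(sample_path)

print(f"plain: {plain_secs:.4f}s  mmap: {mmap_secs:.4f}s")
print("same result:", plain_counts == mmap_counts)
```

Both functions do the same hashing and counting work and differ only in how the lines are read, so any gap between the two timings comes from the read path alone.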

The OS is Ubuntu 18.04 x86_64, running on a KVM VPS with 4 GB of dedicated RAM, two AMD 7302 cores, and a 40 GB SSD. Ruby is version 2.5.1 and Perl is version 5.26.1.

Perl has built-in mmap support. For Ruby, I installed the mmap library this way:

sudo apt install ruby-mmap2

Why does this happen? I will continue to look into the problem.